一句话总结,即将数据集分为k个类别。原理:1、首先选取k个初始点作为中心;然后遍历所有的点,对于每一个点,计算k个中心中到这个点距离最小的中心,然后将这个点划分到这个中心;3、当所有的点划分完毕,重新计算类别的计算中心(将均值作为中心),直到中心点不再变化。但是这种方式可能陷入局部最小解,为了克服这个缺陷,提出了二分k-means算法,这种方式类似于二分法。即先划分两类,然后,再将其中一类划分为两类,这样就扩展到三类,一次类推。对于到底选取哪一类进行扩张,衡量刻度是到质心的总距离,选取最小的那一个。

一、应用场景

这是一种无监督的学习,一般用来划分,实际生活中常常遇到,比如村落的划分,经常是把村落聚集的一个划分为一个村落。同样比如美国的大选,对于某一些地区的选民,很显然有一部分选民很大程度上是一致的态度,如果能找到他们这一个群里,汇集起来,就可以专门针对,来进行宣传。同样,对于推荐系统等,找到某一类共同特制的人群,也可以进行定点推销。

二、聚类算法

由于原理简单,只是动手做了原理部分,没有亲自实现案列部分。

代码:kMeans.py

1 | """ |

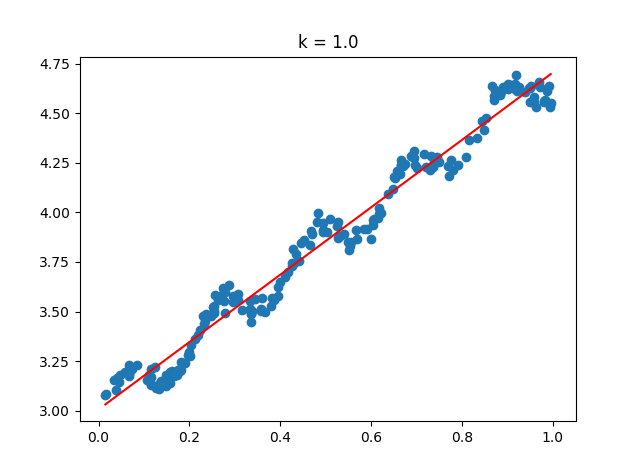

画图代码:plotTest.py

1 | """ |

测试代码:test.py

1 | """ |